DELL Compellent – Auto Storage Tiering

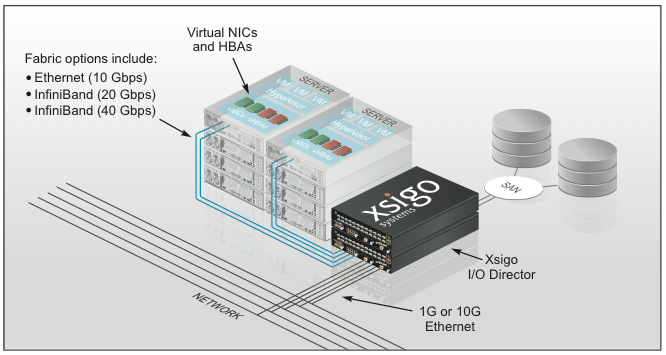

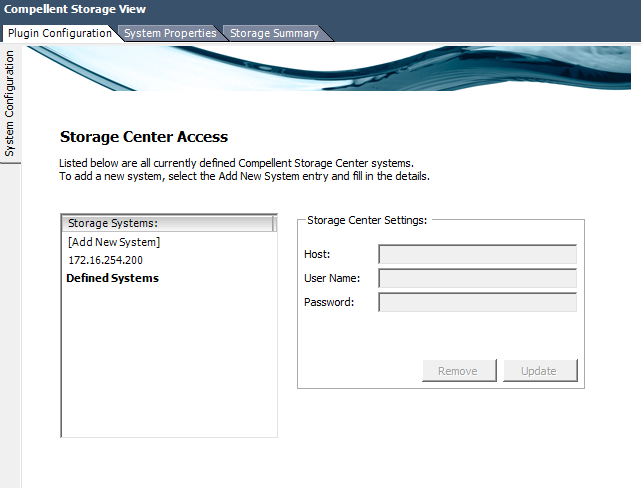

Over two days I had the opportunity to test the Compellent SAN solution, and I find it really cool and smooth. When you integrate it with vCenter, you get a single pane of glass for handling the whole solution. You have to install the Compellent plugin on every VMware client that should administer the Compellent and show the plugin-specific tabs. You also have to add the Compellent management address and a user with a password and, of course, sufficient rights to do SAN stuff.

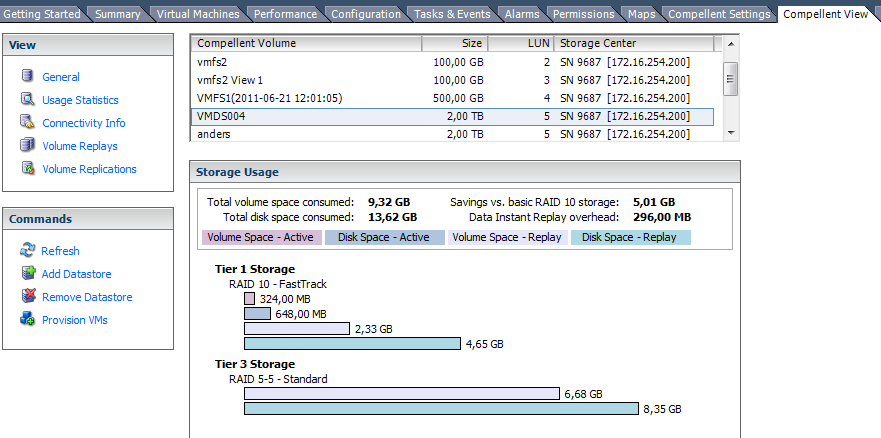

After adding the Storage Center you get the following tabs:

As you can see in the Compellent view, you can add and remove datastores. If you do this here, the Compellent plugin also formats the datastore with VMFS and rescans the datastores on the hosts. As the screenshot also shows, for each datastore you can see where your data is and how much of it resides on each tier. Tier 1 is the fastest, and if your setup has more than two different types of disk you also get a Tier 2 and a Tier 3. The active blocks are moved to faster or slower disks depending on demand.
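To make the tiering idea a bit more concrete, here is a minimal Python sketch of how blocks could be promoted or demoted based on recent activity. This is only an illustration of the concept, not how Compellent actually implements it: the `Page` class, the `retier` function and the two thresholds are all hypothetical names and values I made up.

```python
from dataclasses import dataclass


@dataclass
class Page:
    page_id: int
    tier: int       # 1 = fastest tier, 3 = slowest tier
    recent_io: int  # hypothetical access count for the last period


def retier(pages, hot_threshold=100, cold_threshold=10, slowest_tier=3):
    """Move busy pages one tier up and idle pages one tier down.

    The thresholds are made-up values for illustration only.
    """
    for page in pages:
        if page.recent_io >= hot_threshold and page.tier > 1:
            page.tier -= 1  # promote to a faster tier
        elif page.recent_io <= cold_threshold and page.tier < slowest_tier:
            page.tier += 1  # demote to a slower tier
    return pages


pages = [Page(1, 2, 500), Page(2, 1, 3), Page(3, 2, 50)]
retier(pages)
print([(p.page_id, p.tier) for p in pages])
# prints [(1, 1), (2, 2), (3, 2)]
```

The busy page moves up to Tier 1, the idle page drops from Tier 1 to Tier 2, and the page with moderate activity stays where it is.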

With the Replay function you get SAN-level backup functionality, and for fully consistent backups you can install an agent in the VMs so that they prepare a VSS snapshot before the Replays run. It is really cool to be able to take SAN snapshots this way.

Another thing you can see in the screenshot is that the datastore is 2 TB but only 9.32 GB is used! Of course, you still have to size your solution to cope with the amount of data that you are going to store.
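The sizing point can be shown with a little arithmetic. The sketch below takes the 2 TB and 9.32 GB from the screenshot (counting 2 TB as 2048 GB) and works out how much of the datastore is really consumed. The second function shows why sizing still matters when several thin datastores share one pool. The function names, the three-datastore example and the 4096 GB pool size are my own assumptions, not numbers from the test system.

```python
def thin_usage(provisioned_gb, used_gb):
    """Return (percent of the provisioned size in use, GB not yet consumed)."""
    return used_gb / provisioned_gb * 100, provisioned_gb - used_gb


def overcommit_ratio(provisioned_sizes_gb, physical_capacity_gb):
    """Ratio of promised space to real disk space; above 1.0 means overcommitted."""
    return sum(provisioned_sizes_gb) / physical_capacity_gb


percent_used, unconsumed_gb = thin_usage(2048, 9.32)
print(f"{percent_used:.2f}% used, {unconsumed_gb:.2f} GB not yet consumed")
# prints "0.46% used, 2038.68 GB not yet consumed"

# Hypothetical: three 2 TB datastores on a pool with 4096 GB of real disk.
print(overcommit_ratio([2048, 2048, 2048], 4096))
# prints 1.5
```

A ratio of 1.5 means the datastores together promise 50% more space than the disks can hold, which is fine as long as the real data stays below the physical capacity.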

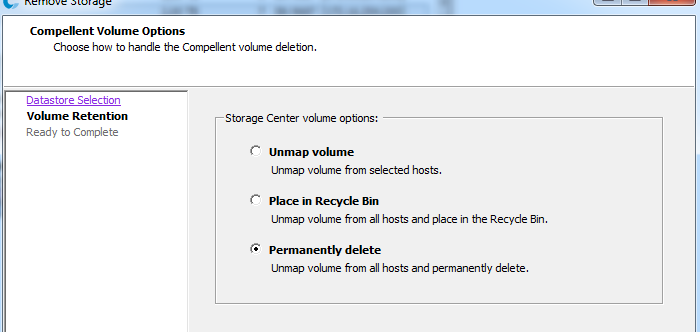

When deleting a datastore you get the following choices. If you put it in the Recycle Bin, you can get it back as long as its space has not been needed by other data. In other words, as long as there is free space in the Compellent you can restore the datastore, which is handy if it held some VMs that you need later and did not migrate to another datastore.
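Here is a small Python sketch of that Recycle Bin behaviour as I understand it: a deleted datastore keeps its space until new data needs it, and only then is it gone for good. The `Pool` class and its methods are hypothetical, and purging the oldest recycled datastore first is purely my assumption. I have not verified in which order the Compellent reclaims space.

```python
class Pool:
    """Toy model of a storage pool with a recycle bin (illustration only)."""

    def __init__(self, capacity_gb):
        self.capacity_gb = capacity_gb
        self.live = {}      # datastore name -> used GB
        self.recycled = {}  # deleted datastores, kept in deletion order

    def free_gb(self):
        used = sum(self.live.values()) + sum(self.recycled.values())
        return self.capacity_gb - used

    def write(self, name, size_gb):
        # Reclaim recycled space only when the new data does not fit.
        # Oldest-first purging is an assumption made for this sketch.
        while self.free_gb() < size_gb and self.recycled:
            oldest = next(iter(self.recycled))
            del self.recycled[oldest]
        if self.free_gb() < size_gb:
            raise RuntimeError("pool is full")
        self.live[name] = self.live.get(name, 0) + size_gb

    def delete(self, name):
        self.recycled[name] = self.live.pop(name)

    def restore(self, name):
        if name not in self.recycled:
            return False  # space was already reclaimed
        self.live[name] = self.recycled.pop(name)
        return True


pool = Pool(capacity_gb=100)
pool.write("ds1", 40)
pool.write("ds2", 30)
pool.delete("ds1")
print(pool.restore("ds1"))  # prints True, nothing needed the space yet

pool.delete("ds1")
pool.write("ds3", 50)       # does not fit unless ds1 is purged
print(pool.restore("ds1"))  # prints False
```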

The Compellent solution is very powerful and has many more functions than I have highlighted here. I hope I will get some more time to test and use it in the near future!