Bug in VMM 2012 SP1: VM NIC disconnected after cold migration?

Yesterday I was at a customer, configuring their off-site Hyper-V cluster. I was setting up the live migration settings so that the VMs could be moved between the data centers with shared nothing live migration. I configured Kerberos authentication and delegation in Active Directory, but I did not think of the 10 hours (600 minutes) that a Kerberos ticket can live, so I got some errors regarding constrained delegation. If you read this TechNet page on how to configure live migration outside of clusters a bit more carefully, it does say that the delegation change takes effect once "A new kerboros ticket has been issued."

I did not think of this at first and checked the host settings and the Active Directory objects twice 😛 but it still did not work, and the ticket lifetime never crossed my mind. If you want to purge the Kerberos tickets instead of waiting, you can use the klist command line tool.
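To make the timing issue concrete, here is a minimal sketch in Python. It works out when a ticket issued at a given time would expire with the 600-minute lifetime mentioned above, and builds the `klist purge` command line. The helper names (`ticket_expiry`, `build_purge_command`, `purge_tickets`) are made up for this illustration, and targeting logon ID `0x3e7` (the Local System session, which holds the computer account's tickets) is my assumption about which cached tickets matter for constrained delegation, not something I verified at the customer.

```python
import shutil
import subprocess
import sys
from datetime import datetime, timedelta

# Ticket lifetime mentioned in the post: 10 hours (600 minutes).
DEFAULT_TICKET_LIFETIME = timedelta(minutes=600)

# Well-known logon ID of the Local System session (assumed target here).
LOCAL_SYSTEM_LOGON_ID = "0x3e7"


def ticket_expiry(issued_at, lifetime=DEFAULT_TICKET_LIFETIME):
    """Return the time at which a ticket issued at `issued_at` expires."""
    return issued_at + lifetime


def build_purge_command(logon_id=None):
    """Build the klist command line that purges cached tickets.

    Without a logon ID, klist works on the current logon session;
    with one (for example 0x3e7), it targets that session instead.
    """
    command = ["klist"]
    if logon_id is not None:
        command += ["-li", logon_id]
    command.append("purge")
    return command


def purge_tickets(logon_id=None):
    """Run the purge, but only on a Windows host where klist is available."""
    if not sys.platform.startswith("win") or shutil.which("klist") is None:
        return None  # not a Windows host, nothing to purge
    return subprocess.run(build_purge_command(logon_id), check=False).returncode


if __name__ == "__main__":
    issued = datetime(2014, 1, 1, 8, 0)
    print("Old ticket valid until:", ticket_expiry(issued))
    print("Purge command:", " ".join(build_purge_command(LOCAL_SYSTEM_LOGON_ID)))
```

So a ticket handed out at 08:00 can stay in the cache until 18:00, which is why checking the delegation settings over and over changes nothing until the ticket is renewed or purged. On the host itself you would simply run the printed command from an elevated prompt.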

While troubleshooting, I wanted to test a cold migration between the clusters from SC VMM, and that looked like no problem at all. It should also be said that both clusters were configured with the same logical network, VM networks, logical switch and uplink, so it was the same configuration! SC VMM has been updated with the latest CU 4.

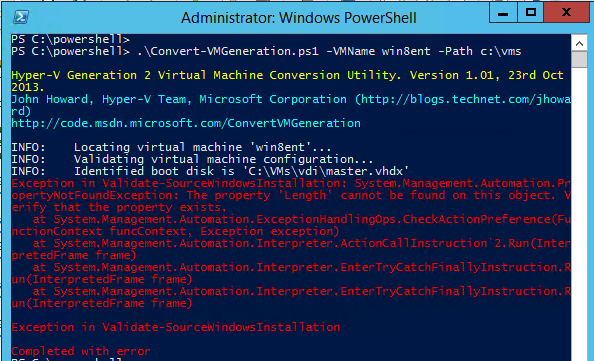

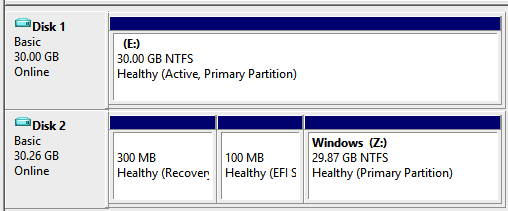

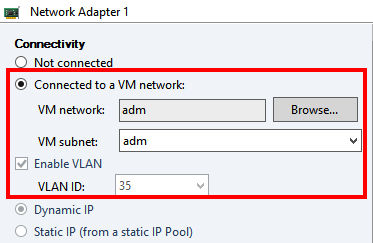

When the VM had been migrated, I started it and tried to ping its IP address but got no response. Strange, I thought, so I looked at the properties of the VM in VMM, and it said that the network card was connected:

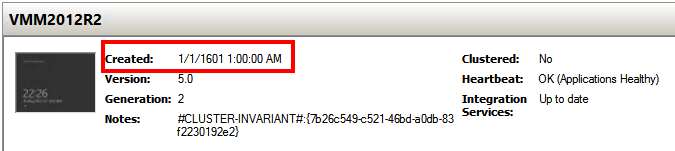

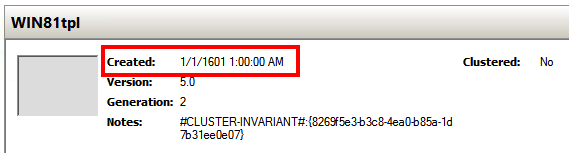

But inside the VM it still said not connected:

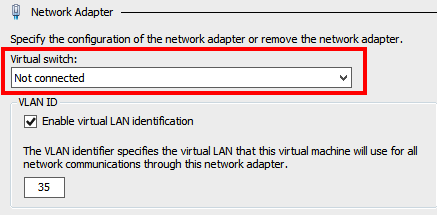

Going into Hyper-V Manager and looking at the VM's properties from there, I could also see that it was not connected. I also did a VM refresh in VMM, but the connection status on the VM object did not change to reflect the real status, as the screen dump below from the properties in Hyper-V Manager shows:

Once I connected it to the (logical) virtual switch on the host with Hyper-V Manager, it started to respond to ping, of course.

I will continue to examine this further, and maybe it has been fixed in VMM 2012 R2.