Win 8 Server dev preview and Hyper-V NIC team

There is quite a buzz on Twitter and in blogs about the new features that have come to Windows 8 and the new Hyper-V version. I want to give you a little heads-up about how to create a network team with NICs (yes, it works with different network cards; in my case an Intel and a Broadcom).

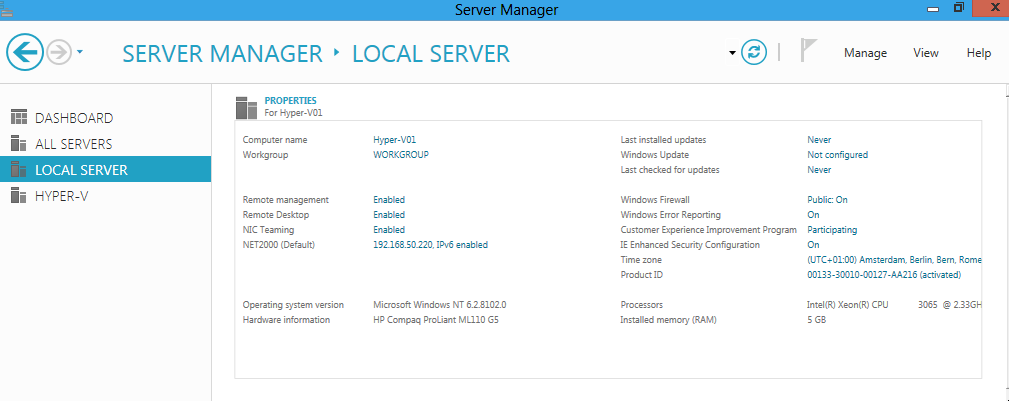

I have now installed the server on my test machine in our office and was eager to test the NIC teaming. At first I did not understand how it worked and tried to bind two NICs together in the Network Connections window in the Control Panel. As I later realized, after reading Aidan Finn's blog, it is done through LBFOAdmin.exe (this opens when you click NIC Teaming Enabled/Disabled).

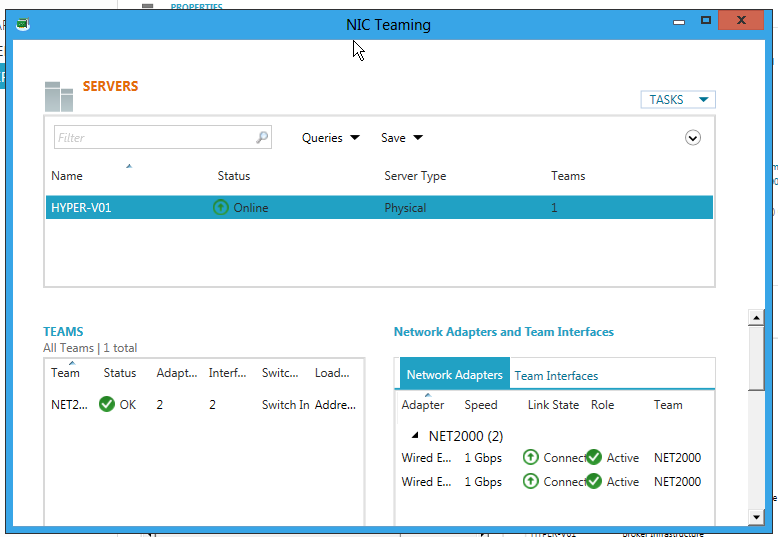

There you have to highlight your server to configure it. The new Server Manager can handle remote servers, so you can configure several workloads at the same time and do not have to log in to each server to administer it.

I have named my team NET2000 and added the two NICs. I have also set it to Switch Independent (I have actually connected it to a simple 5-port switch); you can also choose LACP or Static Teaming. For load distribution mode you can choose Address Hash or Hyper-V Port. Right now I am sharing the team between the management traffic and a Hyper-V switch, so I am using Address Hash.
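To make the difference between the two load distribution modes a bit more concrete, here is a minimal Python sketch. It only illustrates the idea and is not how Windows actually implements it: the CRC32 hash, the function names and the `vm_port_index` parameter are all my own assumptions for the example.

```python
import zlib

# The two physical NICs in the team (names taken from my setup).
TEAM_MEMBERS = ["Intel", "Broadcom"]


def pick_by_address_hash(src_ip, dst_ip, src_port, dst_port, members=TEAM_MEMBERS):
    """Address Hash idea: hash the flow's addresses and ports so that
    every packet of the same flow leaves on the same team member.
    CRC32 is only a stand-in hash for this sketch."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    return members[zlib.crc32(key) % len(members)]


def pick_by_switch_port(vm_port_index, members=TEAM_MEMBERS):
    """Hyper-V Port idea: pin all traffic from one virtual switch port
    (one VM's virtual NIC) to a single team member.
    vm_port_index is a hypothetical numbering of the switch ports."""
    return members[vm_port_index % len(members)]


if __name__ == "__main__":
    # Two different flows from the same host may land on different NICs.
    print(pick_by_address_hash("10.0.0.5", "10.0.0.9", 50000, 443))
    print(pick_by_address_hash("10.0.0.5", "10.0.0.9", 50001, 443))
    # Each VM port always maps to the same NIC.
    print(pick_by_switch_port(0), pick_by_switch_port(1))
```

With Address Hash, traffic from a single host can be spread over both NICs flow by flow, which suits a team that also carries management traffic. With Hyper-V Port, each VM stays on one NIC.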

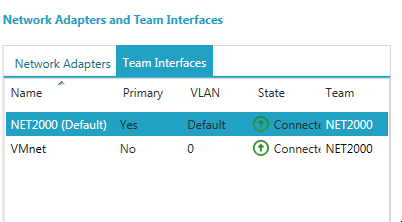

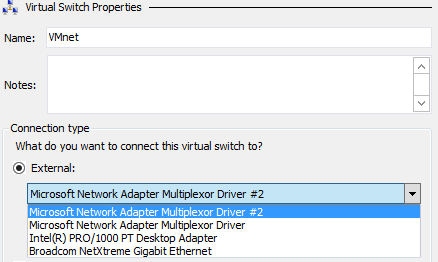

As you can see, I can then add several virtual NICs with different VLAN IDs. I really hope that they fix one issue, though: as you can see here, I have a virtual NIC interface called VMnet, but when I want to add it in Hyper-V Manager it has a different name, as you can see in the next screenshot. It would have been wonderful to be able to see the name in the Virtual Switch Manager as well.

Previously I had to use network cards from the same manufacturer together with their teaming software, so the built-in teaming in Win 8 is a giant step forward. One thing to test later, when I get my hands on a NIC that can handle SR-IOV, is how that feature works with a team, but that is another blog post!