Updated PowerCLI function for setting the HA admission control policy by percentage or by number of hosts

A colleague asked whether I could add a parameter to my function. No problem, it just took a little division.

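You can now either set the CPU and memory percentages directly, or give the number of hosts in the cluster and let the function set both percentages to 100 divided by that number. With equally sized hosts, this reserves roughly one host's worth of cluster resources. I have not handled the case of more than 100 hosts in a cluster (as this is not possible, at least not yet 😉).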
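To see which percentages `-numberHosts` produces for typical cluster sizes, here is a small sketch that runs in plain PowerShell without a vCenter connection. `Get-FailoverPercent` is a hypothetical helper written only for this illustration. It mirrors the 100 / N calculation, assuming the result ends up as a whole number through PowerShell's standard `[int]` conversion (round to nearest, ties to even).

```powershell
# Hypothetical helper (not part of the original function): the failover
# percentage that -numberHosts N maps to, i.e. 100 / N converted to [int].
# PowerShell's [int] conversion rounds to the nearest integer, ties to even.
function Get-FailoverPercent([int]$numberHosts) {
    return [int](100 / $numberHosts)
}

# Print the reservation for a few common cluster sizes
foreach ($n in 2..8) {
    "{0} hosts -> {1} %" -f $n, (Get-FailoverPercent $n)
}
```

Note that the rounding can land slightly below one full host's share (for example 33 % for 3 hosts, or 12 % for 8 hosts).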

function Set-HAAdmissionControlPolicy{

<#

.SYNOPSIS

Set the Percentage HA Admission Control Policy

.DESCRIPTION

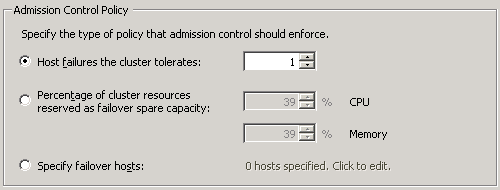

Percentage of cluster resources reserved as failover spare capacity

.PARAMETER Cluster

The Cluster object that is going to be configurered

.PARAMETER numberHosts

When this parameter is set the percentage is set based on number of hosts in cluster

.PARAMETER percentCPU

The percent reservation of CPU Cluster resources

.PARAMETER percentMem

The percent reservation of Memory Cluster resources

.EXAMPLE

PS C:\> Set-HAAdmissionControlPolicy -Cluster $CL -percentCPU 50 -percentMem 50

.EXAMPLE

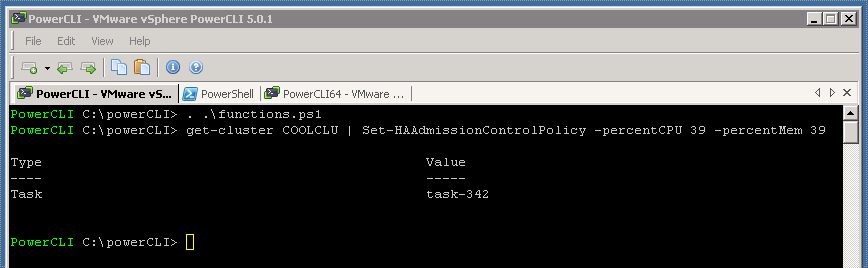

PS C:\> Get-Cluster | Set-HAAdmissionControlPolicy -percentCPU 50 -percentMem 50

.EXAMPLE

PS C:\> Set-HAAdmissionControlPolicy -Cluster $CL -numberHosts 5

.NOTES

Author: Niklas Akerlund / RTS

Date: 2012-01-25

#>

    param (
        [Parameter(Position=0, Mandatory=$true, HelpMessage="This needs to be a cluster object",
            ValueFromPipeline=$true)]
        $Cluster,

        [int]$numberHosts = 0,

        [int]$percentCPU = 25,

        [int]$percentMem = 25
    )

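    # A process block is needed so that every cluster piped in
    # (Get-Cluster | Set-HAAdmissionControlPolicy) is configured, not just the last one
    process {
        if (Get-Cluster $Cluster) {
            $spec = New-Object VMware.Vim.ClusterConfigSpecEx
            $spec.dasConfig = New-Object VMware.Vim.ClusterDasConfigInfo
            $spec.dasConfig.admissionControlPolicy = New-Object VMware.Vim.ClusterFailoverResourcesAdmissionControlPolicy

            if ($numberHosts -eq 0) {
                # Use the explicit CPU and memory percentages
                $spec.dasConfig.admissionControlPolicy.cpuFailoverResourcesPercent = $percentCPU
                $spec.dasConfig.admissionControlPolicy.memoryFailoverResourcesPercent = $percentMem
            } else {
                # Reserve one host's share of the cluster: 100 / number of hosts,
                # rounded to the nearest whole percent (the API expects an integer)
                $percent = [int](100 / $numberHosts)
                $spec.dasConfig.admissionControlPolicy.cpuFailoverResourcesPercent = $percent
                $spec.dasConfig.admissionControlPolicy.memoryFailoverResourcesPercent = $percent
            }

            # Apply the spec; $true means modify (merge into) the existing cluster configuration
            $clusterView = Get-View $Cluster
            $clusterView.ReconfigureComputeResource_Task($spec, $true)
        }
    }
}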