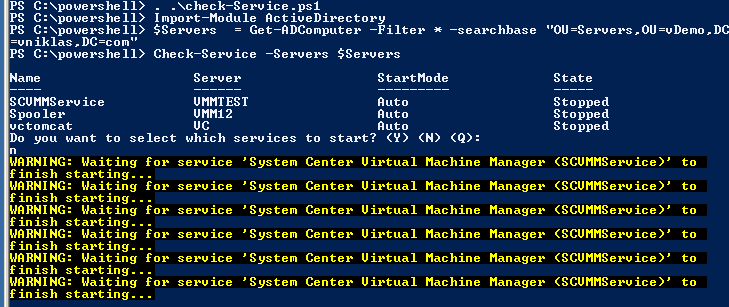

Upgrading my Win8 beta server to Windows 2012

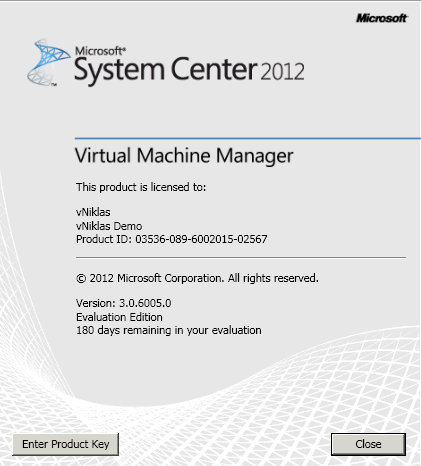

The RC of Windows Server 2012 was released yesterday, so I thought I would give it a try.

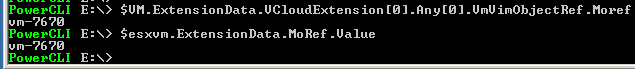

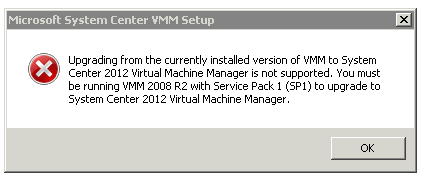

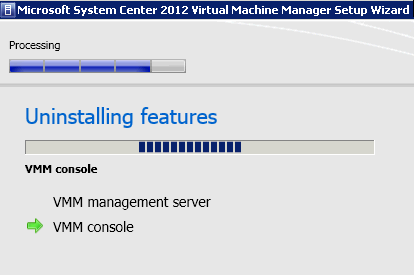

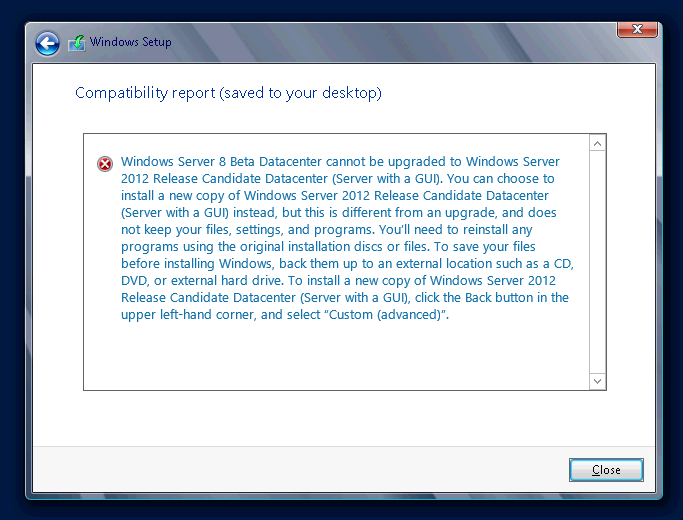

First of all, I wanted to test whether I could upgrade my Win8 beta server in place to the Windows 2012 RC, but as you can see in the picture from the installation, this is not possible. So what to do? As I had two nodes, I live migrated my VMs to the other Win8 beta node and did a fresh install.

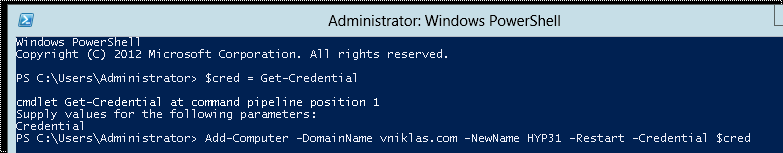

When it was finished I wanted to add the server to the domain, and of course this should be done with PowerShell. As you all know, the OS gets a not so friendly name during installation, so with the parameter -NewName I rename it at the same time as I add it to the domain.
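For readers who cannot make out the screenshots, a rename-and-join in one step could look roughly like the sketch below. The domain name `corp.example.com` is a placeholder, and `Hyp31` is assumed to be the new host name because it is the server name mentioned later in this post.

```powershell
# Sketch only: 'corp.example.com' is a placeholder domain name, and 'Hyp31'
# is assumed to be the new computer name (it is mentioned later in the post).

# Prompt for a domain account that is allowed to join computers to the domain.
$credential = Get-Credential -Message 'Domain account allowed to join computers'

# Join the domain and rename the computer in one operation, then reboot.
Add-Computer -DomainName 'corp.example.com' `
             -NewName 'Hyp31' `
             -Credential $credential `
             -Restart
```

The -Restart switch reboots the server immediately, so leave it out if you want to pick the moment for the reboot yourself.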

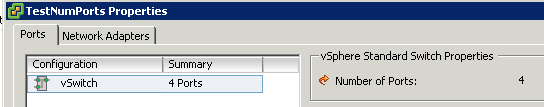

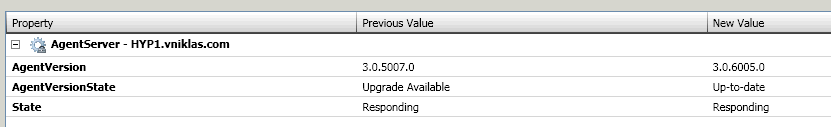

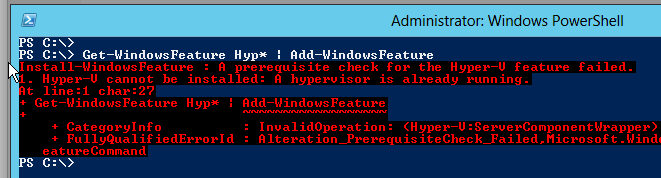

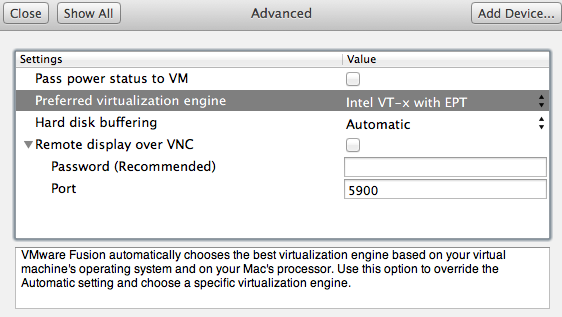

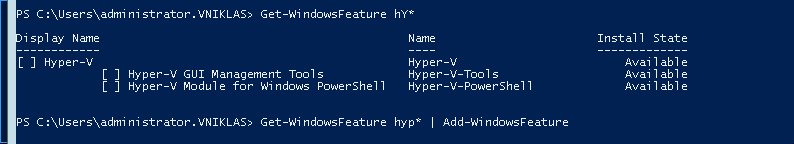

The next step was of course to add the Hyper-V role.
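If you prefer PowerShell over Server Manager for this step, a minimal sketch could look like this. It assumes the `Install-WindowsFeature` cmdlet from the ServerManager module and is not necessarily what the screenshots show.

```powershell
# Sketch only: adds the Hyper-V role together with its management tools.
# -Restart reboots the server automatically if the installation requires it.
Install-WindowsFeature -Name Hyper-V -IncludeManagementTools -Restart

# After the reboot, check that the role is reported as installed.
Get-WindowsFeature -Name Hyper-V
```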

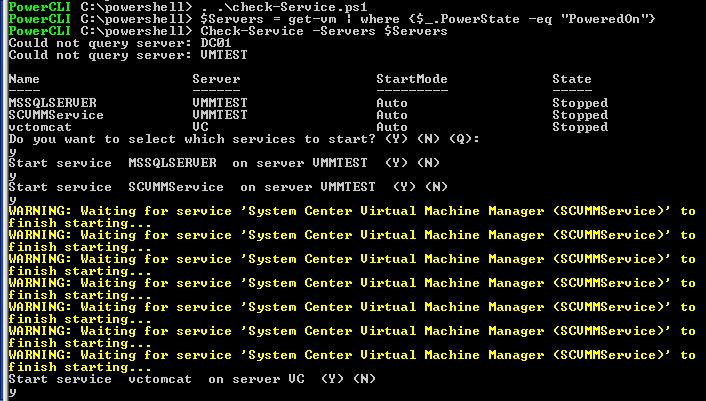

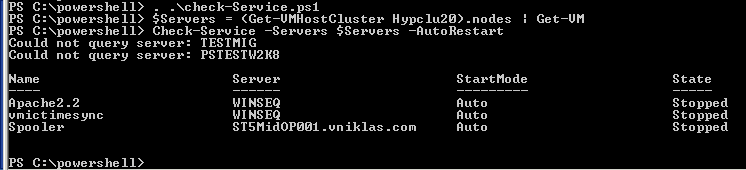

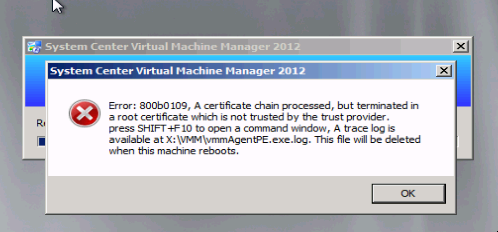

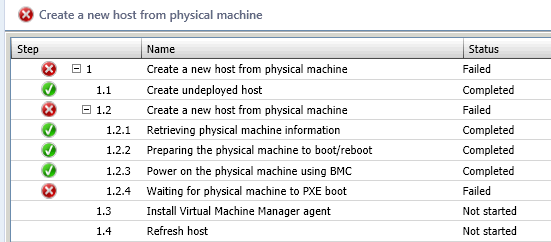

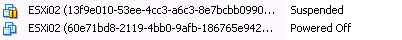

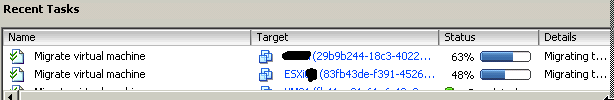

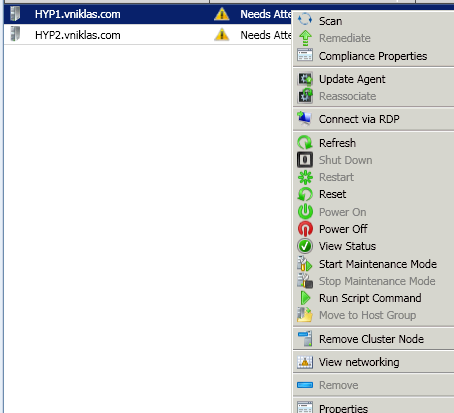

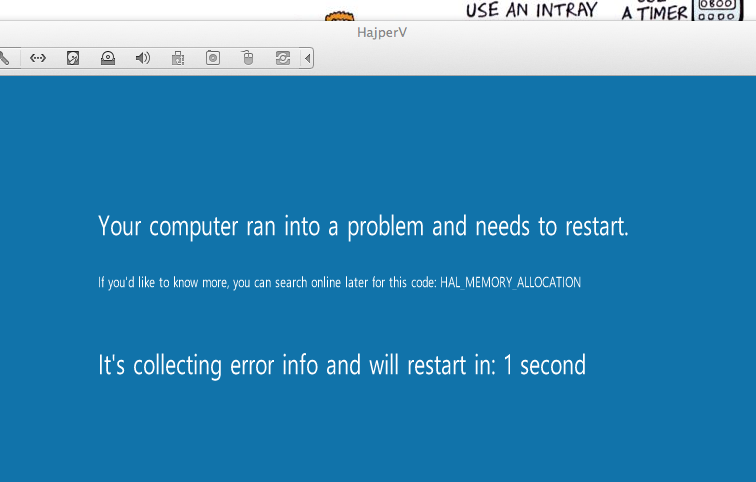

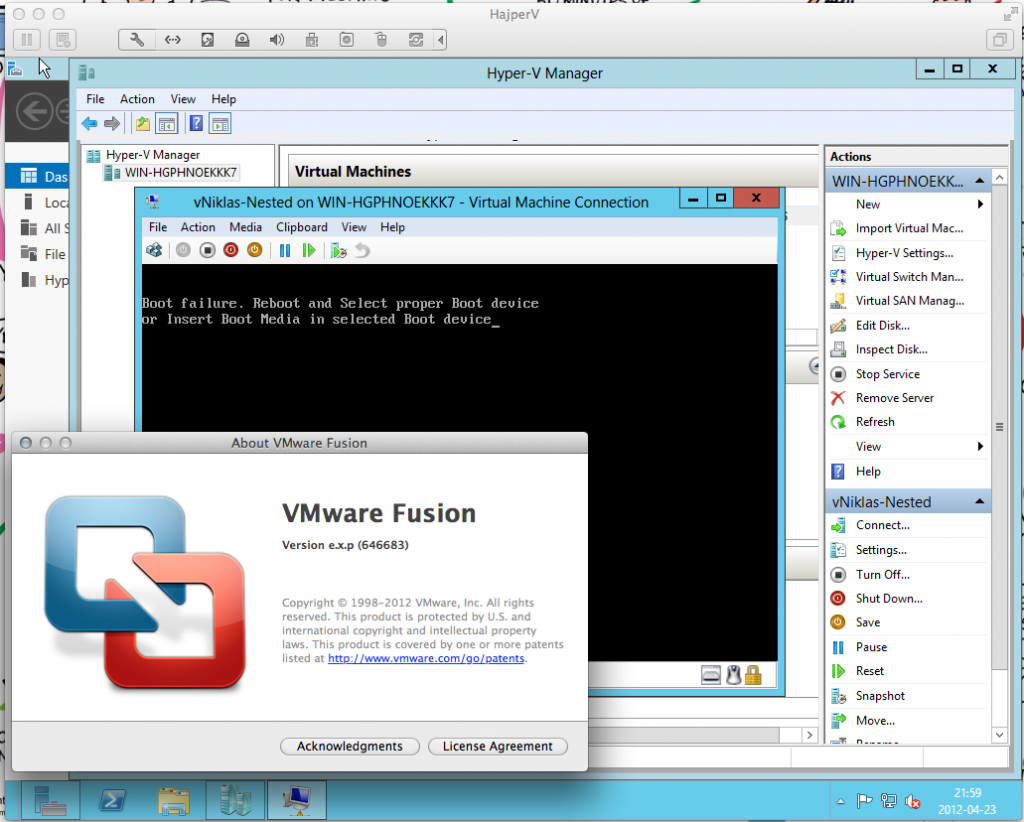

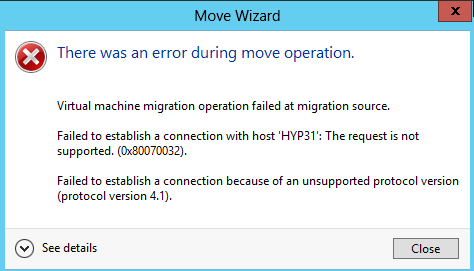

And what to do next? Well, I wanted to live migrate my VMs from my other node, but that was unfortunately not possible 🙁 because of some kind of protocol mismatch, as you can see in my next screenshot.

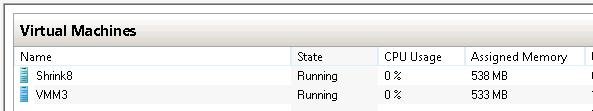

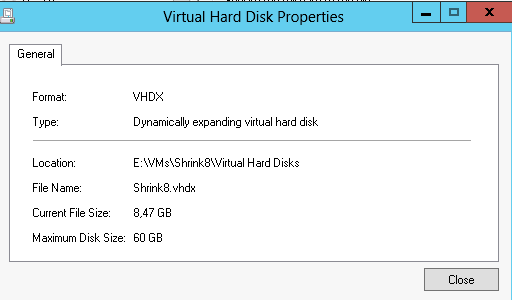

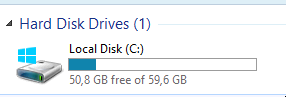

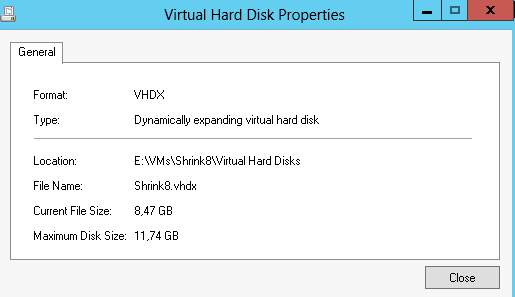

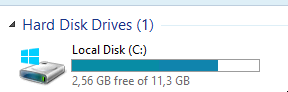

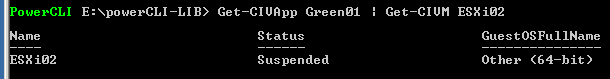

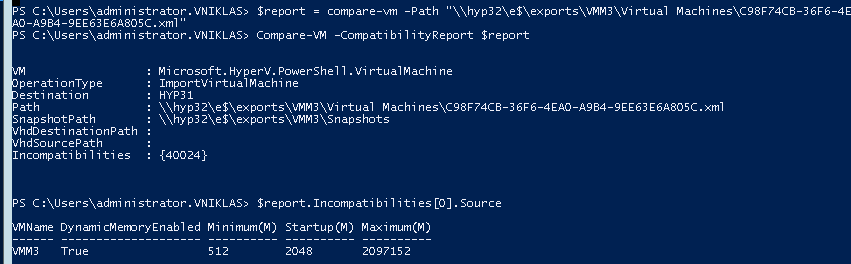

OK, so my next plan was to export the VMs and then import them, also with PowerShell. But as the Win8 beta had set the dynamic memory maximum to 2 TB, I got a configuration issue that I had to handle before I could do a successful import.
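The export side, run on the old Win8 beta node, might look like the sketch below. The VM name `TestVM01` and the folder `D:\Export` are placeholders, and I am assuming the VM has to be shut down (or saved) before it can be exported on this release.

```powershell
# Sketch only: 'TestVM01' and 'D:\Export' are hypothetical names.

# Assumption: the VM must not be running while it is exported.
Stop-VM -Name 'TestVM01'

# Export the configuration and virtual hard disks to a folder
# that can later be copied to the new host.
Export-VM -Name 'TestVM01' -Path 'D:\Export'
```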

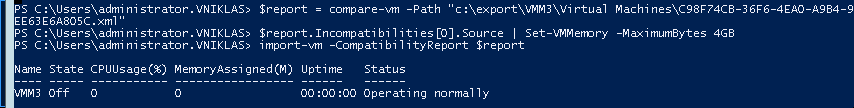

After this I could import it (notice though that I could not use the parameter -Copy together with -CompatibilityReport, so I had to manually copy the VM to the Hyp31 server).
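The fix and the import together could be sketched as follows, run on the new host after the exported files have been copied over by hand. The folder `D:\VMs\TestVM01` and the 4GB maximum are made-up example values, and adjusting the memory through `$report.VM` is my assumption of how to handle it, not necessarily what the screenshot shows.

```powershell
# Sketch only: 'D:\VMs\TestVM01' is a hypothetical folder holding the
# manually copied export; 4GB is an arbitrary example value.
$vmRoot = 'D:\VMs\TestVM01'

# Find the VM configuration file inside the exported folder structure.
$configFile = Get-ChildItem -Path (Join-Path $vmRoot 'Virtual Machines') -Filter '*.xml' |
    Select-Object -First 1

# Build a compatibility report instead of importing directly.
$report = Compare-VM -Path $configFile.FullName

# List what the new host objects to (for example the 2 TB memory maximum).
$report.Incompatibilities | Format-Table -Property MessageId, Message -AutoSize

# Lower the dynamic memory maximum on the VM object inside the report.
Set-VMMemory -VM $report.VM -MaximumBytes 4GB

# Re-evaluate the report and import only if nothing is left to fix.
$report = Compare-VM -CompatibilityReport $report
if (-not $report.Incompatibilities) {
    Import-VM -CompatibilityReport $report
}
```

If the report still lists incompatibilities after the memory change, fix those the same way before calling Import-VM.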

Good luck with your migration to the Windows Server 2012 RC 🙂