VMware vSphere V and licensing

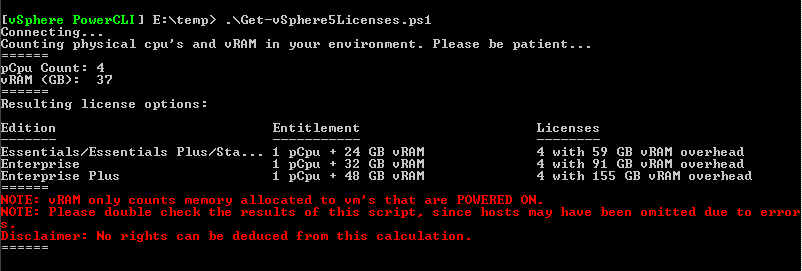

I have now tested the script that Hugo Peeters made for checking what licensing a vSphere platform needs when upgrading to V.

Of course this is a small platform and we do not have that many machines running, but the point is that it is a cool script that gives you a hint of where you are and how many licenses your platform needs.

One thing my colleagues have missed, and that I wanted to touch on and highlight, is vRAM and pooling. I think it is well documented in the vSphere licensing, pricing and packaging document.

The new licensing model is as follows:

- No more restrictions on cores

- No max physical RAM limit

- You still need one license/pCPU

- Different vSphere editions cannot be mixed in the same vRAM pool; if one vCenter manages more than one edition, each edition gets its own vRAM pool

Each edition comes with a vRAM entitlement per license: 24 GB for Standard, 36 GB for Enterprise and 48 GB for Enterprise+. These entitlements are pooled across all hosts connected to the same vCenter.

Say you have a virtual machine configured with 128 GB vRAM on a host with 2 pCPUs and 192 GB physical RAM; with Enterprise+ that host contributes 2 × 48 = 96 GB vRAM. If the vSphere cluster this host belongs to has 3 other hosts with the same setup, the pool holds 4 × 96 = 384 GB vRAM, and 384 – 128 leaves 256 GB for other VMs before you need to buy more licenses. Hosts managed by a linked vCenter also contribute their vRAM to the pool.

What I am trying to say is that although this one VM uses more vRAM than its host is entitled to, you are still compliant, because the entitlement is counted per pool and not per host.
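The arithmetic in this example can be sketched in a few lines of Python. This is only an illustration and not Hugo Peeters' script; the names `VRAM_PER_LICENSE_GB` and `pool_capacity_gb` are made up for this post, and the entitlement figures are the ones quoted above.

```python
# vRAM entitlement per license (one license per pCPU), in GB, as quoted above.
# The dictionary and function names are hypothetical, chosen for this sketch.
VRAM_PER_LICENSE_GB = {"standard": 24, "enterprise": 36, "enterprise_plus": 48}


def pool_capacity_gb(edition, pcpus_per_host):
    """Total pooled vRAM for hosts of one edition managed by the same vCenter."""
    return sum(pcpus_per_host) * VRAM_PER_LICENSE_GB[edition]


# Four hosts with 2 pCPUs each, all licensed with Enterprise+.
capacity = pool_capacity_gb("enterprise_plus", [2, 2, 2, 2])

# One VM configured with 128 GB vRAM is drawn from the pool.
remaining = capacity - 128

print(capacity, remaining)  # prints: 384 256
```

The pool is computed per edition on purpose, since hosts with different editions end up in separate vRAM pools.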

As in any virtualization design, you must plan for host failures. A host's vRAM entitlement stays in the pool, so the other hosts can still use it while that host is down for maintenance or because of a failure.

In the above example you can add more licenses to get more vRAM; these licenses can later be used when you add a new host, to cover its physical CPUs.
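As a rough sketch of that sizing question, the snippet below estimates how many extra licenses are needed when the configured vRAM outgrows the pool. The function name `extra_licenses_needed` and the 450 GB figure are hypothetical, picked only to show the rounding; the 384 GB pool and the 48 GB Enterprise+ entitlement come from the example above.

```python
import math


def extra_licenses_needed(configured_vram_gb, pool_capacity_gb, vram_per_license_gb):
    """Number of additional licenses required to cover a vRAM shortfall (0 if none)."""
    shortfall = configured_vram_gb - pool_capacity_gb
    if shortfall <= 0:
        return 0
    # Licenses come in whole units, so round the shortfall up.
    return math.ceil(shortfall / vram_per_license_gb)


# Hypothetical case: 450 GB of configured vRAM against the 384 GB Enterprise+ pool.
print(extra_licenses_needed(450, 384, 48))  # prints: 2
```

Because licenses are whole units, a shortfall of 66 GB already costs two Enterprise+ licenses, which then leaves some headroom in the pool.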

Hope this sheds some light on the licensing jungle.